Step 1.

Install Solaris 10u9 from DVD (Full + OEM) with a ZFS root and no separate /var.

{0} ok boot cdrom

- Profile------------------------------------------------------

The information shown below is your profile for installing Solaris software.

It reflects the choices you've made on previous screens.

===============================================================

Installation Option: Initial

Boot Device: c0t0d0

Root File System Type: ZFS

Client Services: None

Regions: North America

System Locale: U.S.A. (UTF-8) ( en_US.UTF-8 )

Software: Solaris 10, Entire Distribution plus OEM su

Pool Name: rpool

Boot Environment Name: s10u9

Pool Size: 286091 MB

Devices in Pool: c0t0d0

----------------------------------------------------------------

Esc-2_Begin Installation F4_Change F5_Exit F6_Help

Preparing system for Solaris install

Configuring disk (c0t0d0)

- Creating Solaris disk label (VTOC)

- Creating pool rpool

- Creating swap zvol for pool rpool

- Creating dump zvol for pool rpool

Creating and checking file systems

- Creating rpool/ROOT/s10u9 dataset

Beginning Solaris software installation

Solaris Initial Install

MBytes Installed: 595.40

MBytes Remaining: 3615.16

Installing: Sun OpenGL for Solaris Runtime Libraries

/

| | | | | |

0 20 40 60 80 100

Solaris 10 software installation succeeded

Customizing system files

- Mount points table (/etc/vfstab)

- Unselected disk mount points (/var/sadm/system/data/vfstab.unselected)

- Network host addresses (/etc/hosts)

- Environment variables (/etc/default/init)

Cleaning devices

Customizing system devices

- Physical devices (/devices)

- Logical devices (/dev)

Installing boot information

- Installing boot blocks (c0t0d0s0)

- Installing boot blocks (/dev/rdsk/c0t0d0s0)

Installation log location

- /a/var/sadm/system/logs/install_log (before reboot)

- /var/sadm/system/logs/install_log (after reboot)

Installation complete

Executing SolStart postinstall phase...

Executing finish script "patch_finish"...

Finish script patch_finish execution completed.

Executing JumpStart postinstall phase...

The begin script log 'begin.log'

is located in /var/sadm/system/logs after reboot.

The finish script log 'finish.log'

is located in /var/sadm/system/logs after reboot.

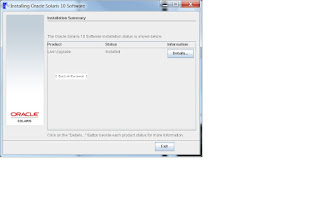

Launching installer. Please Wait...

Installing Additional Software

|-1%--------------25%-----------------50%-----------------75%--------------100%|

Pausing for 30 seconds at the "Summary" screen. The wizard will continue to

the next step unless you select "Pause". Enter 'p' to pause. Enter 'c' to

continue. [c]

Creating boot_archive for /a

updating /a/platform/sun4u/boot_archive

syncing file systems... done

rebooting...

Resetting...

POST Sequence 01 CPU Check

POST Sequence 02 Banner

LSB#00 (XSB#00-0): POST 2.15.0 (2010/10/06 14:23)

POST Sequence 03 Fatal Check

POST Sequence 04 CPU Register

POST Sequence 05 STICK

POST Sequence 06 MMU

POST Sequence 07 Memory Initialize

POST Sequence 08 Memory

POST Sequence 09 Raw UE In Cache

POST Sequence 0A Floating Point Unit

POST Sequence 0B SC

POST Sequence 0C Cacheable Instruction

POST Sequence 0D Softint

POST Sequence 0E CPU Cross Call

POST Sequence 0F CMU-CH

POST Sequence 10 PCI-CH

POST Sequence 11 Master Device

POST Sequence 12 DSCP

POST Sequence 13 SC Check Before STICK Diag

POST Sequence 14 STICK Stop

POST Sequence 15 STICK Start

POST Sequence 16 Error CPU Check

POST Sequence 17 System Configuration

POST Sequence 18 System Status Check

POST Sequence 19 System Status Check After Sync

POST Sequence 1A OpenBoot Start...

POST Sequence Complete.

SPARC Enterprise M4000 Server, using Domain console

Copyright (c) 1998, 2011, Oracle and/or its affiliates. All rights reserved.

Copyright (c) 2011, Oracle and/or its affiliates and Fujitsu Limited. All rights reserved.

OpenBoot 4.33.0.a, 131072 MB memory installed, Serial #954xxxx.

Ethernet address 0:21:28:xx:xx:xx, Host ID: 85xxxxxx.

Rebooting with command: boot

Boot device: disk:a File and args:

SunOS Release 5.10 Version Generic_142909-17 64-bit

Copyright (c) 1983, 2010, Oracle and/or its affiliates. All rights reserved.

Hostname: m4k-d01

Configuring devices.

Loading smf(5) service descriptions: 187/187

Reading ZFS config: done.

Mounting ZFS filesystems: (5/5)

Creating new rsa public/private host key pair

Creating new dsa public/private host key pair

m4k-d01 console login:

bash-3.00# luactivate s10u10

A Live Upgrade Sync operation will be performed on startup of boot environment <s10u10>.

**********************************************************************

The target boot environment has been activated. It will be used when you reboot. NOTE: You MUST NOT USE the reboot, halt, or uadmin commands. You MUST USE either the init or the shutdown command when you reboot. If you do not use either init or shutdown, the system will not boot using the target BE.

**********************************************************************

In case of a failure while booting to the target BE, the following process needs to be followed to fallback to the currently working boot environment:

1. Enter the PROM monitor (ok prompt).

2. Boot the machine to Single User mode using a different boot device

(like the Solaris Install CD or Network). Examples:

At the PROM monitor (ok prompt):

For boot to Solaris CD: boot cdrom -s

For boot to network: boot net -s

3. Mount the Current boot environment root slice to some directory (like

/mnt). You can use the following commands in sequence to mount the BE:

zpool import rpool

zfs inherit -r mountpoint rpool/ROOT/s10u9

zfs set mountpoint=<mountpointName> rpool/ROOT/s10u9

zfs mount rpool/ROOT/s10u9

4. Run <luactivate> utility with out any arguments from the Parent boot

environment root slice, as shown below:

<mountpointName>/sbin/luactivate

5. luactivate, activates the previous working boot environment and

indicates the result.

6. Exit Single User mode and reboot the machine.

**********************************************************************

Modifying boot archive service

Activation of boot environment <s10u10> successful.
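
The fallback procedure that luactivate prints above can be condensed into a small helper. This is only a sketch: the function name fallback_commands is hypothetical, and it prints the commands from steps 3 and 4 for a given mount point and BE name instead of running them, so they can be reviewed before being typed at a single-user prompt.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the fallback commands from the
# luactivate banner for a given mount point and previously working BE.
fallback_commands() {
    mnt=$1             # directory to mount the old BE on, e.g. /mnt
    be=$2              # name of the previously working BE, e.g. s10u9
    pool=${3:-rpool}   # root pool name; rpool in this walkthrough
    echo "zpool import $pool"
    echo "zfs inherit -r mountpoint $pool/ROOT/$be"
    echo "zfs set mountpoint=$mnt $pool/ROOT/$be"
    echo "zfs mount $pool/ROOT/$be"
    echo "$mnt/sbin/luactivate"
}

fallback_commands /mnt s10u9
```

Printing rather than executing is a deliberate choice here, since the real commands only make sense after booting from CD or network as described in step 2 of the banner.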

Okay, so I rebooted into the newly upgraded Solaris 10 u10 BE.

I checked my zone status and it does not look good initially:

bash-3.2# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

- zone1 installed /zonedata/zone1-s10u10 native shared

What is this "-s10u10" on my zonepath? It is the path lucreate remapped the zone to when it cloned the zonedata/zone1 dataset for the new BE.

I'll try to boot the zone:

bash-3.2# zoneadm -z zone1 boot

zoneadm: zone 'zone1': zone is already booted

Strange. Looks like some initial cleanup perhaps and then it booted okay. This is starting to look good.

Now I see what I would expect to see:

bash-3.2# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

5 zone1 running /zonedata/zone1 native shared
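
The check done by eye above (is every zone back in the running state?) can be sketched as a small filter. The function name zones_not_running is hypothetical; it assumes the six-column "zoneadm list -civ" layout shown in this walkthrough and reads that output on stdin.

```shell
#!/bin/sh
# Hypothetical helper: read "zoneadm list -civ" output on stdin and print
# the name of every non-global zone whose STATUS column is not "running".
zones_not_running() {
    awk 'NF >= 6 && $2 != "NAME" && $2 != "global" && $3 != "running" { print $2 }'
}

# Intended use on the Solaris host (not run here):
#   zoneadm list -civ | zones_not_running
```

An empty result means all non-global zones are running; any name printed is a zone that still needs attention after the reboot.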

bash-3.2# cat /etc/release

Oracle Solaris 10 8/11 s10s_u10wos_17b SPARC

Copyright (c) 1983, 2011, Oracle and/or its affiliates. All rights reserved.

Assembled 23 August 2011

bash-3.2# lustatus

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

s10u9 yes no no yes -

s10u10 yes yes yes no -
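
To confirm the active BE from a script rather than by reading the table, the lustatus columns can be picked apart with awk. This is a sketch under the assumption that the output keeps the column order shown above (name, complete, active now, active on reboot, can delete, copy status); the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers: read "lustatus" output on stdin.
# Field 3 is "Active Now", field 4 is "Active On Reboot".
active_be()    { awk '$3 == "yes" { print $1 }'; }
next_boot_be() { awk '$4 == "yes" { print $1 }'; }

# Intended use on the Solaris host (not run here):
#   lustatus | active_be
#   lustatus | next_boot_be
```

The header and separator lines never have "yes" in those fields, so they are skipped without any special handling.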

Step 2.

bash-3.00# zpool status

pool: rpool

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

c0t0d0s0 ONLINE 0 0 0

bash-3.00# zpool create -f zonedata c0t1d0s0

bash-3.00# zpool status zonedata

pool: zonedata

state: ONLINE

scrub: none requested

config:

NAME STATE READ WRITE CKSUM

zonedata ONLINE 0 0 0

c0t1d0s0 ONLINE 0 0 0

errors: No known data errors

Step 3.

If I need /usr/local in the non-global zones, I first have to "mkdir /usr/local" in the Global Zone, because the zones inherit /usr read-only and cannot create the mount point themselves.

bash-3.00# cd /usr/

bash-3.00# mkdir local

bash-3.00# ls -ld /usr/local

drwxr-xr-x 2 root root 2 Dec 27 10:18 /usr/local

However, I am backing each zone's lofs /usr/local with /opt/"ZONENAME"/local in the Global Zone:

bash-3.00# mkdir -p /opt/zone1/local

bash-3.00# ls -ld /opt/zone1/local

drwxr-xr-x 2 root root 2 Dec 27 10:19 /opt/zone1/local

Contents of the zone template file, /var/tmp/zone1 (the -b on the first line means create a blank zone):

create -b

set zonepath=/zonedata/zone1

set autoboot=true

set scheduling-class=FSS

set ip-type=shared

add inherit-pkg-dir

set dir=/lib

end

add inherit-pkg-dir

set dir=/platform

end

add inherit-pkg-dir

set dir=/sbin

end

add inherit-pkg-dir

set dir=/usr

end

add fs

set dir=/usr/local

set special=/opt/zone1/local

set type=lofs

end

add capped-memory

set physical=16G

set locked=8g

set swap=16g

end

add net

set address=10.10.10.100/24

set physical=bge0

set defrouter=10.10.10.1

end
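
If more zones of the same shape are needed, the template above can be generated instead of hand-edited. The following is a minimal sketch, not the method used in this walkthrough: the function name make_zone_template, the zone name zone2 and the address 10.10.10.101/24 are all hypothetical, while every zonecfg directive is copied from the template above.

```shell
#!/bin/sh
# Hypothetical helper: print a zonecfg command file with the same settings
# as the zone1 template, for a different zone name and address.
make_zone_template() {
    zone=$1                   # zone name, e.g. zone2 (hypothetical)
    addr=$2                   # address with prefix length
    nic=${3:-bge0}            # physical interface used in this walkthrough
    router=${4:-10.10.10.1}   # default router used in this walkthrough
    cat <<EOF
create -b
set zonepath=/zonedata/$zone
set autoboot=true
set scheduling-class=FSS
set ip-type=shared
EOF
    # One inherit-pkg-dir resource per inherited directory.
    for dir in /lib /platform /sbin /usr; do
        printf 'add inherit-pkg-dir\nset dir=%s\nend\n' "$dir"
    done
    cat <<EOF
add fs
set dir=/usr/local
set special=/opt/$zone/local
set type=lofs
end
add capped-memory
set physical=16G
set locked=8G
set swap=16G
end
add net
set address=$addr
set physical=$nic
set defrouter=$router
end
EOF
}

make_zone_template zone2 10.10.10.101/24
```

Redirecting the output to a file such as /var/tmp/zone2 would give a command file that can be passed to zonecfg with -f in the same way as the zone1 template.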

Now build the zone via the template:

bash-3.00# zonecfg -z zone1 -f /var/tmp/zone1

bash-3.00# zonecfg -z zone1

zonecfg:zone1> info

zonename: zone1

zonepath: /zonedata/zone1

brand: native

autoboot: true

bootargs:

pool:

limitpriv:

scheduling-class: FSS

ip-type: shared

hostid:

inherit-pkg-dir:

dir: /lib

inherit-pkg-dir:

dir: /platform

inherit-pkg-dir:

dir: /sbin

inherit-pkg-dir:

dir: /usr

fs:

dir: /usr/local

special: /opt/zone1/local

raw not specified

type: lofs

options: []

net:

address: 10.10.10.100/24

physical: bge0

defrouter: 10.10.10.1

capped-memory:

physical: 16G

[swap: 16G]

[locked: 8G]

rctl:

name: zone.max-locked-memory

value: (priv=privileged,limit=8589934592,action=deny)

rctl:

name: zone.max-swap

value: (priv=privileged,limit=17179869184,action=deny)
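
The byte limits in the two rctl entries above follow directly from the capped-memory settings, reading G as 2^30 bytes. A small sketch of that arithmetic (the function name to_bytes is hypothetical):

```shell
#!/bin/sh
# Convert a size such as 8G or 16g into bytes (binary units, 1G = 1024^3).
to_bytes() {
    num=${1%[KkMmGgTt]}   # numeric part with any unit letter stripped
    unit=${1#"$num"}      # the unit letter, or empty if none was given
    case $unit in
        [Kk]) mult=1024 ;;
        [Mm]) mult=$((1024 * 1024)) ;;
        [Gg]) mult=$((1024 * 1024 * 1024)) ;;
        [Tt]) mult=$((1024 * 1024 * 1024 * 1024)) ;;
        *)    mult=1 ;;
    esac
    echo $((num * mult))
}

to_bytes 8g    # locked -> 8589934592  (zone.max-locked-memory)
to_bytes 16g   # swap   -> 17179869184 (zone.max-swap)
```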

Next step is to install the zone:

bash-3.00# zoneadm -z zone1 install

A ZFS file system has been created for this zone.

Preparing to install zone <zone1>.

Creating list of files to copy from the global zone.

Copying <3094> files to the zone.

Initializing zone product registry.

Determining zone package initialization order.

Preparing to initialize <1338> packages on the zone.

Initialized <1338> packages on zone.

Zone <zone1> is initialized.

The file </zonedata/zone1/root/var/sadm/system/logs/install_log> contains a log of the zone installation.

The last step is to copy a sysidcfg file into the zone's /etc directory:

bash-3.00# cat /var/tmp/sysidcfg

timezone=US/Eastern

system_locale=en_US.UTF-8

terminal=vt100

network_interface=PRIMARY {hostname=zone1}

root_password=QBfUglMMOVERw

security_policy=none

name_service=NONE

nfs4_domain=dynamic

bash-3.00# ls -l /zonedata/zone1/root/etc/sysidcfg

-rw-r--r-- 1 root root 191 Dec 27 10:30 /zonedata/zone1/root/etc/sysidcfg
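
The copy itself is not shown in the transcript above, only its result. A minimal sketch of that step, assuming the source file and zonepath used in this walkthrough (the function name install_sysidcfg is hypothetical):

```shell
#!/bin/sh
# Hypothetical helper: copy a sysidcfg file into an installed zone's root
# so the zone can configure itself on first boot.
install_sysidcfg() {
    src=$1        # e.g. /var/tmp/sysidcfg
    zonepath=$2   # e.g. /zonedata/zone1
    dest=$zonepath/root/etc/sysidcfg
    [ -f "$src" ] || { echo "missing $src" >&2; return 1; }
    [ -d "$zonepath/root/etc" ] || { echo "no installed zone at $zonepath" >&2; return 1; }
    cp "$src" "$dest" && chmod 644 "$dest"
}

# Intended use in the global zone (not run here):
#   install_sysidcfg /var/tmp/sysidcfg /zonedata/zone1
```

The two checks fail early if the zone has not been installed yet, which is the most likely way for the copy to end up in the wrong place.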

Okay, now we are ready to boot the zone:

bash-3.00# zoneadm -z zone1 boot; zlogin -C zone1

[Connected to zone 'zone1' console]

Hostname: zone1

Loading smf(5) service descriptions: 155/155

Reading ZFS config: done.

Creating new rsa public/private host key pair

Creating new dsa public/private host key pair

Configuring network interface addresses: bge0.

rebooting system due to change(s) in /etc/default/init

[NOTICE: Zone rebooting]

SunOS Release 5.10 Version Generic_142909-17 64-bit

Copyright (c) 1983, 2010, Oracle and/or its affiliates. All rights reserved.

Hostname: zone1

Reading ZFS config: done.

zone1 console login: Dec 27 13:32:34 zone1 sendmail[8797]: My unqualified host name (zone1) unknown; sleeping for retry

Dec 27 13:32:34 zone1 sendmail[8801]: My unqualified host name (zone1) unknown; sleeping for retry

root

Password:

Dec 27 13:32:37 zone1 login: ROOT LOGIN /dev/console

Oracle Corporation SunOS 5.10 Generic Patch January 2005

Okay, the zone is running now:

bash-3.00# uname -a

SunOS tais-m4k-d01 5.10 Generic_142909-17 sun4u sparc SUNW,SPARC-Enterprise

bash-3.00# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

2 zone1 running /zonedata/zone1 native shared

Step 4.

Solaris 10 requires some patches before we can use LiveUpgrade.

Per Oracle Document: 1004881.1

SPARC:

- 119254-LR Install and Patch Utilities Patch

- 121430-57 Live Upgrade patch

- 121428-LR SUNWluzone required patches

- 138130-01 vold patch; however, 141444-09 obsoletes this patch
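
Before running patchadd, it can help to check which of the patches listed above are already present. The sketch below is an assumption-laden example rather than part of the original procedure: the function name has_patch is hypothetical, and it expects "showrev -p" style lines on stdin, where each line starts with "Patch: <id>-<rev>".

```shell
#!/bin/sh
# Hypothetical helper: read "showrev -p" output on stdin and succeed if
# patch <base> is installed at revision <minrev> or later.
# Limitation: it does not follow "Obsoletes:" relationships.
has_patch() {
    awk -v base="$1" -v min="$2" '
        $1 == "Patch:" {
            split($2, id, "-")
            if (id[1] == base && id[2] + 0 >= min + 0) found = 1
        }
        END { exit found ? 0 : 1 }
    '
}

# Intended use on the Solaris host (not run here):
#   showrev -p | has_patch 121430 57 && echo "Live Upgrade patch present"
```

Because obsoleting patches are not followed, a result of "missing" for 138130 would still need a manual check for 141444.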

bash-3.00# patchadd 119254-82

Validating patches...

Loading patches installed on the system...

Done!

Loading patches requested to install.

Done!

Checking patches that you specified for installation.

Done!

Approved patches will be installed in this order:

119254-82

Preparing checklist for non-global zone check...

Checking non-global zones...

This patch passes the non-global zone check.

119254-82

Summary for zones:

Zone zone1

Rejected patches:

None.

Patches that passed the dependency check:

119254-82

Patching global zone

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 119254-82 has been successfully installed.

See /var/sadm/patch/119254-82/log for details

Executing postpatch script...

Patch packages installed:

SUNWinstall-patch-utils-root

SUNWpkgcmdsr

SUNWpkgcmdsu

SUNWswmt

Done!

Patching non-global zones...

Patching zone zone1

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 119254-82 has been successfully installed.

See /var/sadm/patch/119254-82/log for details

Executing postpatch script...

Patch packages installed:

SUNWinstall-patch-utils-root

SUNWpkgcmdsr

SUNWpkgcmdsu

SUNWswmt

Done!

bash-3.00# patchadd 121428-15

Validating patches...

Loading patches installed on the system...

Done!

Loading patches requested to install.

Done!

Checking patches that you specified for installation.

Done!

Approved patches will be installed in this order:

121428-15

Preparing checklist for non-global zone check...

Checking non-global zones...

This patch passes the non-global zone check.

121428-15

Summary for zones:

Zone zone1

Rejected patches:

None.

Patches that passed the dependency check:

121428-15

Patching global zone

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 121428-15 has been successfully installed.

See /var/sadm/patch/121428-15/log for details

Executing postpatch script...

Patch packages installed:

SUNWluzone

Done!

Patching non-global zones...

Patching zone zone1

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 121428-15 has been successfully installed.

See /var/sadm/patch/121428-15/log for details

Executing postpatch script...

Patch packages installed:

SUNWluzone

Done!

bash-3.00# patchadd 121430-68

Validating patches...

Loading patches installed on the system...

Done!

Loading patches requested to install.

Done!

Checking patches that you specified for installation.

Done!

Approved patches will be installed in this order:

121430-68

Preparing checklist for non-global zone check...

Checking non-global zones...

This patch passes the non-global zone check.

121430-68

Summary for zones:

Zone zone1

Rejected patches:

None.

Patches that passed the dependency check:

121430-68

Patching global zone

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 121430-68 has been successfully installed.

See /var/sadm/patch/121430-68/log for details

Executing postpatch script...

Patch packages installed:

SUNWlucfg

SUNWlur

SUNWluu

Done!

Patching non-global zones...

Patching zone zone1

Adding patches...

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 121430-68 has been successfully installed.

See /var/sadm/patch/121430-68/log for details

Executing postpatch script...

Patch packages installed:

SUNWlucfg

SUNWlur

SUNWluu

Done!

Okay, the patches are applied now. I'll reboot to be sure, though I should not have to; I have that luxury since this is not Production yet.

Now it's time to create the Live Upgrade environment for the upgrade from Solaris 10 u9 to Solaris 10 u10:

bash-3.00# lustatus

ERROR: No boot environments are configured on this system

ERROR: cannot determine list of all boot environment names

bash-3.00# lucreate -n s10u10

Analyzing system configuration.

No name for current boot environment.

INFORMATION: The current boot environment is not named - assigning name <s10u9>.

Current boot environment is named <s10u9>.

Creating initial configuration for primary boot environment <s10u9>.

INFORMATION: No BEs are configured on this system.

The device </dev/dsk/c0t0d0s0> is not a root device for any boot environment; cannot get BE ID.

PBE configuration successful: PBE name <s10u9> PBE Boot Device </dev/dsk/c0t0d0s0>.

Updating boot environment description database on all BEs.

Updating system configuration files.

Creating configuration for boot environment <s10u10>.

Source boot environment is <s10u9>.

Creating file systems on boot environment <s10u10>.

Populating file systems on boot environment <s10u10>.

Temporarily mounting zones in PBE <s10u9>.

Analyzing zones.

WARNING: Directory </zonedata/zone1> zone <global> lies on a filesystem shared between BEs, remapping path to </zonedata/zone1-s10u10>.

WARNING: Device <zonedata/zone1> is shared between BEs, remapping to <zonedata/zone1-s10u10>.

Duplicating ZFS datasets from PBE to ABE.

Creating snapshot for <rpool/ROOT/s10u9> on <rpool/ROOT/s10u9@s10u10>.

Creating clone for <rpool/ROOT/s10u9@s10u10> on <rpool/ROOT/s10u10>.

Creating snapshot for <zonedata/zone1> on <zonedata/zone1@s10u10>.

Creating clone for <zonedata/zone1@s10u10> on <zonedata/zone1-s10u10>.

Mounting ABE <s10u10>.

Generating file list.

Finalizing ABE.

Fixing zonepaths in ABE.

Unmounting ABE <s10u10>.

Fixing properties on ZFS datasets in ABE.

Reverting state of zones in PBE <s10u9>.

Making boot environment <s10u10> bootable.

Population of boot environment <s10u10> successful.

Creation of boot environment <s10u10> successful.
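
The two WARNING lines and the snapshot/clone lines in the lucreate output above show a naming pattern for the zone dataset: the snapshot is named after the new BE, and the clone (with its remapped zonepath) gets "-<BE name>" appended. The sketch below only reproduces that pattern as observed in this run; the function name abe_zone_names is hypothetical and the pattern is not claimed to hold for every Live Upgrade release.

```shell
#!/bin/sh
# Hypothetical helper: print the snapshot, clone and zonepath names that
# lucreate reported in this run for a zone dataset and a new BE name.
abe_zone_names() {
    dataset=$1   # zone dataset, e.g. zonedata/zone1
    be=$2        # new boot environment, e.g. s10u10
    echo "snapshot: $dataset@$be"
    echo "clone:    $dataset-$be"
    echo "zonepath: /$dataset-$be"
}

abe_zone_names zonedata/zone1 s10u10
```

The zonepath line assumes the dataset is mounted at its default location under /, as it is for zonedata/zone1 here.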

Okay, this looks good, I think. The zone is still running and I have two BEs:

bash-3.00# lustatus

Boot Environment Is Active Active Can Copy

Name Complete Now On Reboot Delete Status

-------------------------- -------- ------ --------- ------ ----------

s10u9 yes yes yes no -

s10u10 yes no no yes -

bash-3.00# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

1 zone1 running /zonedata/zone1 native shared

I'll need to install the LU packages from the u10 media in order to satisfy Oracle.

I have my media on an autofs /net mount. I use the "installer" script.

I'll also need to create the autoreg file to stop the annoying Oracle tracking.

bash-3.00# echo "auto_reg=disable" > /tmp/autoregfile

Okay, that should about do it. I can now upgrade to Update 10 with a running zone that has an lofs filesystem. (Allegedly.)

bash-3.00# luupgrade -u -n s10u10 -k /tmp/autoregfile -s /net/js/media/10u10

64459 blocks

miniroot filesystem is <lofs>

Mounting miniroot at </net/nadc-jss-p01/js/media/10u10/Solaris_10/Tools/Boot>

INFORMATION: Auto Registration already done for this BE <s10u10>.

Validating the contents of the media </net/js/media/10u10>.

The media is a standard Solaris media.

The media contains an operating system upgrade image.

The media contains <Solaris> version <10>.

Constructing upgrade profile to use.

Locating the operating system upgrade program.

Checking for existence of previously scheduled Live Upgrade requests.

Creating upgrade profile for BE <s10u10>.

Determining packages to install or upgrade for BE <s10u10>.

Performing the operating system upgrade of the BE <s10u10>.

CAUTION: Interrupting this process may leave the boot environment unstable or unbootable.

Upgrading Solaris: 100% completed

Installation of the packages from this media is complete.

Updating package information on boot environment <s10u10>.

Package information successfully updated on boot environment <s10u10>.

Adding operating system patches to the BE <s10u10>.

The operating system patch installation is complete.

INFORMATION: The file </var/sadm/system/logs/upgrade_log> on boot

environment <s10u10> contains a log of the upgrade operation.

INFORMATION: The file </var/sadm/system/data/upgrade_cleanup> on boot environment <s10u10> contains a log of cleanup operations required.

INFORMATION: Review the files listed above. Remember that all of the files are located on boot environment <s10u10>. Before you activate boot environment <s10u10>, determine if any additional system maintenance is required or if additional media of the software distribution must be installed.

The Solaris upgrade of the boot environment <s10u10> is complete.

Installing failsafe

Failsafe install is complete.

bash-3.00# lustatus

Boot Environment           Is       Active Active    Can    Copy
Name                       Complete Now    On Reboot Delete Status
-------------------------- -------- ------ --------- ------ ----------
s10u9                      yes      yes    yes       no     -
s10u10                     yes      no     no        yes    -

bash-3.00# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

1 zone1 running /zonedata/zone1 native shared

bash-3.00# luactivate s10u10

A Live Upgrade Sync operation will be performed on startup of boot environment <s10u10>.

**********************************************************************

The target boot environment has been activated. It will be used when you reboot. NOTE: You MUST NOT USE the reboot, halt, or uadmin commands. You MUST USE either the init or the shutdown command when you reboot. If you do not use either init or shutdown, the system will not boot using the target BE.

**********************************************************************

In case of a failure while booting to the target BE, the following process needs to be followed to fallback to the currently working boot environment:

1. Enter the PROM monitor (ok prompt).

2. Boot the machine to Single User mode using a different boot device

(like the Solaris Install CD or Network). Examples:

At the PROM monitor (ok prompt):

For boot to Solaris CD: boot cdrom -s

For boot to network: boot net -s

3. Mount the Current boot environment root slice to some directory (like

/mnt). You can use the following commands in sequence to mount the BE:

zpool import rpool

zfs inherit -r mountpoint rpool/ROOT/s10u9

zfs set mountpoint=<mountpointName> rpool/ROOT/s10u9

zfs mount rpool/ROOT/s10u9

4. Run <luactivate> utility with out any arguments from the Parent boot

environment root slice, as shown below:

<mountpointName>/sbin/luactivate

5. luactivate, activates the previous working boot environment and

indicates the result.

6. Exit Single User mode and reboot the machine.

**********************************************************************

Modifying boot archive service

Activation of boot environment <s10u10> successful.
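The reboot itself is not shown in the log. Per the luactivate notice above, it has to be done with init or shutdown, not reboot or halt. A minimal sketch of a guarded reboot follows; the lu_reboot function and the LU_REBOOT_CONFIRM variable are hypothetical names invented for illustration, and by default the function only prints what it would run.

```shell
# Hypothetical wrapper: only prints the command unless LU_REBOOT_CONFIRM=1
# is set, so it is safe to paste and try. "init 6" is one of the two forms
# the luactivate notice allows; "shutdown -y -g0 -i6" would be the other.
lu_reboot() {
    if [ "${LU_REBOOT_CONFIRM:-0}" = "1" ]; then
        init 6
    else
        echo "would run: init 6"
    fi
}

# Dry run:            lu_reboot
# Actually reboot:    LU_REBOOT_CONFIRM=1 lu_reboot
```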

Okay, so I rebooted into the newly upgraded Solaris 10 u10 BE.

I checked my zone status, and initially it does not look good:

bash-3.2# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

- zone1 installed /zonedata/zone1-s10u10 native shared

What is this "-s10u10" suffix on my zone path?

I don't know, but I'll try to boot the zone:

bash-3.2# zoneadm -z zone1 boot

zoneadm: zone 'zone1': zone is already booted

Strange. It looks like some initial cleanup was still in progress, perhaps, and then the zone booted okay. This is starting to look good.

Now I see what I would expect to see:

bash-3.2# zoneadm list -civ

ID NAME STATUS PATH BRAND IP

0 global running / native shared

5 zone1 running /zonedata/zone1 native shared
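The before-and-after zone check above can be turned into a small filter. This is a sketch under assumptions: zones_not_running is a hypothetical helper name, and it relies on the "zoneadm list -civ" column order shown above (ID, NAME, STATUS, PATH, BRAND, IP).

```shell
# Hypothetical helper: reads "zoneadm list -civ" output on stdin and prints
# name, status and path for every non-global zone that is not "running".
# The header row and any blank lines are skipped.
# On Solaris 10, nawk or /usr/xpg4/bin/awk is the safer choice of awk.
zones_not_running() {
    awk 'NF >= 4 && $1 != "ID" && $2 != "global" && $3 != "running" { print $2, $3, $4 }'
}

# Intended use on the live system:
#   zoneadm list -civ | zones_not_running
```

Fed the first post-reboot listing, it would report zone1 as installed at /zonedata/zone1-s10u10; fed the second listing, it prints nothing.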

bash-3.2# cat /etc/release

Oracle Solaris 10 8/11 s10s_u10wos_17b SPARC

Copyright (c) 1983, 2011, Oracle and/or its affiliates. All rights reserved.

Assembled 23 August 2011

bash-3.2# lustatus

Boot Environment           Is       Active Active    Can    Copy
Name                       Complete Now    On Reboot Delete Status
-------------------------- -------- ------ --------- ------ ----------
s10u9                      yes      no     no        yes    -
s10u10                     yes      yes    yes       no     -
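To confirm from a script that the system really came up on the new BE, the "Active Now" column of lustatus is the one to look at. A minimal sketch, assuming the column layout shown above; active_be is a hypothetical helper name, not a Live Upgrade command.

```shell
# Hypothetical helper: reads lustatus output on stdin and prints the name of
# the BE whose "Active Now" column (third field) is "yes".
# Header rows never have "yes" in the third field, so they fall through.
active_be() {
    awk '$3 == "yes" { print $1 }'
}

# Intended use on the live system:
#   [ "$(lustatus | active_be)" = "s10u10" ] && echo "running on s10u10"
```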